Introduction

Updated for cilium/cilium@1.16.3

K3s

I am a big fan of K3s, a lightweight Kubernetes distribution with a small CPU and memory footprint. It can run on a wide range of devices, from cloud VMs and bare metal to IoT edge devices like the Raspberry Pi. K3s is packaged as a single binary with all of its components, and by default it uses an embedded SQLite database.

This post is a step-by-step tutorial to help you install a single-node K3s Kubernetes cluster, which means that one Kubernetes node acts as both the control plane and the worker node. We will begin by setting up the necessary prerequisites on Debian Linux.

Single node cluster

A single-node cluster is well suited to testing and non-production deployments where the focus is on the application itself rather than its reliability or performance. Obviously, a single-node cluster does not offer high availability or failover capability. Despite this, you can interact with a single-node cluster in the same way as with a 100-node cluster.

Cilium

Cilium, a Kubernetes CNI, revolutionizes Kubernetes networking with its enhanced features, superior performance, and robust security capabilities. Its uniqueness stems from the injection of eBPF programs into the Linux kernel, creating a cloud-native compatible networking layer that utilizes Kubernetes identities over IP addresses. This, coupled with the ability to bypass parts of the network stack, results in improved performance.

Cilium is open-source software that provides transparent network security without sacrificing performance. For me, the L7 network policies offered by Cilium are an absolute game-changer, providing an unparalleled level of control and security… but for my homelab, this meant rich application delivery features. Additionally, its service mesh implementation is shaping up to be a formidable addition to the network management toolkit, although I have not used it yet.

Setup

K3s is very lightweight, but it has some minimum requirements, as outlined below. Review the prerequisites before you start.

Prerequisites

To follow along with this guide, you need the following:

- 1 server with a fully installed Linux (I used Debian 12)

- Feel free to install your typical and favorite tools

- SSH access

My target infrastructure in this tutorial is a small VM on Proxmox with these specifications:

- 4 CPU Cores

- 4GB RAM

- 50GB Disk
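Before going further, it can help to compare the target host against the VM specifications listed above. The following is a minimal sketch, not an official K3s requirement check: the helper names `at_least` and `report` are my own, and the thresholds are assumptions set slightly below the nominal 4 GB RAM and 50 GB disk, because the kernel and filesystem reserve part of each.

```shell
# at_least ACTUAL REQUIRED: succeeds when ACTUAL >= REQUIRED (whole numbers).
at_least() {
  [ "$1" -ge "$2" ]
}

# report LABEL ACTUAL REQUIRED: prints one OK/LOW line per resource.
report() {
  if at_least "$2" "$3"; then
    echo "OK: $1 = $2 (threshold $3)"
  else
    echo "LOW: $1 = $2 (threshold $3)"
  fi
}

# Collect the values from the running Linux host; fall back to 0 if a tool is missing.
cpus=$(nproc 2>/dev/null || echo 0)
mem_mb=$(awk '/^MemTotal:/ {print int($2 / 1024)}' /proc/meminfo 2>/dev/null)
disk_gb=$(df -Pk / 2>/dev/null | awk 'NR == 2 {print int($2 / 1024 / 1024)}')

# Thresholds are assumptions based on the VM used in this guide.
report "CPU cores" "${cpus:-0}" 4
report "RAM (MB)" "${mem_mb:-0}" 3800
report "Root disk (GB)" "${disk_gb:-0}" 45
```

A LOW line does not mean K3s will fail to start; it only means the host is smaller than the VM this tutorial was written on.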

Install Helm

- Install Helm. For example, I run the following on Debian Linux:

$ curl -fsSL -o get_helm.sh https://raw.githubusercontent.com/helm/helm/main/scripts/get-helm-3

$ chmod 700 get_helm.sh

$ ./get_helm.sh

Install K3S

- On the K3s host, run the install script. Check out the K3s documentation for more install options. In my deployment, I will be using Cilium with NGINX Ingress, so I will not install the bundled Flannel CNI or Traefik Ingress Controller. The flags below also disable the bundled ServiceLB and network policy controller, since Cilium takes over both roles.

export K3S_KUBECONFIG_MODE="644"

export INSTALL_K3S_EXEC=" --flannel-backend=none --disable-network-policy --disable servicelb --disable traefik"

curl -sfL https://get.k3s.io | sh -

[INFO] Finding release for channel stable

[INFO] Using v1.28.4+k3s2 as release

[INFO] Downloading hash https://github.com/k3s-io/k3s/releases/download/v1.28.4+k3s2/sha256sum-amd64.txt

[INFO] Downloading binary https://github.com/k3s-io/k3s/releases/download/v1.28.4+k3s2/k3s

[INFO] Verifying binary download

[INFO] Installing k3s to /usr/local/bin/k3s

[INFO] Skipping installation of SELinux RPM

[INFO] Creating /usr/local/bin/kubectl symlink to k3s

[INFO] Creating /usr/local/bin/crictl symlink to k3s

[INFO] Creating /usr/local/bin/ctr symlink to k3s

[INFO] Creating killall script /usr/local/bin/k3s-killall.sh

[INFO] Creating uninstall script /usr/local/bin/k3s-uninstall.sh

[INFO] env: Creating environment file /etc/systemd/system/k3s.service.env

[INFO] systemd: Creating service file /etc/systemd/system/k3s.service

[INFO] systemd: Enabling k3s unit

Created symlink /etc/systemd/system/multi-user.target.wants/k3s.service → /etc/systemd/system/k3s.service.

[INFO] Host iptables-save/iptables-restore tools not found

[INFO] Host ip6tables-save/ip6tables-restore tools not found

[INFO] systemd: Starting k3s
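Because Flannel was disabled, the node has no CNI at this point and is expected to report NotReady until Cilium is installed in the next section. A minimal sketch for checking this, assuming kubectl is on the PATH; the helper name `not_ready_nodes` is my own:

```shell
# not_ready_nodes: reads `kubectl get nodes --no-headers` output on stdin and
# prints the name of every node whose STATUS column is not exactly "Ready".
not_ready_nodes() {
  awk '$2 != "Ready" {print $1}'
}

# Only query the cluster when kubectl is available on this machine.
if command -v kubectl >/dev/null 2>&1; then
  if nodes=$(kubectl get nodes --no-headers 2>/dev/null); then
    pending=$(printf '%s\n' "$nodes" | not_ready_nodes)
    if [ -n "$pending" ]; then
      echo "Not Ready yet (expected until a CNI is installed): $pending"
    else
      echo "All nodes report Ready"
    fi
  else
    echo "Could not reach the Kubernetes API server"
  fi
fi
```

Running the same check again after the Cilium install is a quick way to confirm that the CNI came up.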

Install Cilium

Before installing Cilium, ensure that your system meets the minimum system requirements listed in the Cilium documentation; most modern Linux distributions already do.

Mounted eBPF filesystem

- Cilium automatically mounts the eBPF filesystem to persist eBPF resources across restarts of the cilium-agent. This ensures the datapath continues to operate even after the agent is restarted or upgraded. You can also mount the filesystem before deploying Cilium by running the command below only once during the boot process of the machine

sudo mount bpffs -t bpf /sys/fs/bpf

sudo bash -c 'cat <<EOF >> /etc/fstab

none /sys/fs/bpf bpf rw,relatime 0 0

EOF'

- Check /etc/fstab:

cat /etc/fstab

# you should see:

none /sys/fs/bpf bpf rw,relatime 0 0

- Reload fstab:

sudo systemctl daemon-reload

sudo systemctl restart local-fs.target
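One caveat with appending to /etc/fstab using `>>` is that re-running the step adds the same entry again. The sketch below shows an idempotent variant and a quick mount check. The helper name `ensure_line` is hypothetical, and the demonstration writes to a scratch file rather than the real /etc/fstab.

```shell
# ensure_line FILE LINE: append LINE to FILE only when an identical line is
# not already present, so repeating the step does not create duplicates.
ensure_line() {
  grep -qxF -- "$2" "$1" 2>/dev/null || printf '%s\n' "$2" >> "$1"
}

# Demonstration against a scratch file instead of the real /etc/fstab.
scratch=$(mktemp)
ensure_line "$scratch" "none /sys/fs/bpf bpf rw,relatime 0 0"
ensure_line "$scratch" "none /sys/fs/bpf bpf rw,relatime 0 0"
cat "$scratch"   # the entry appears only once

# Check whether bpffs is currently mounted on this host.
if grep -qs ' /sys/fs/bpf bpf ' /proc/mounts; then
  echo "bpffs is mounted"
else
  echo "bpffs is not mounted"
fi
```

To use the helper on the real file, it has to run as root with /etc/fstab as the first argument.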

Install Cilium with Helm

I prefer using Helm charts to set up Cilium because they are simpler and better suited for production environments. Alternatively, you can install Cilium using the CLI.

- Add the Helm repo, update, and install Cilium with your values. Below is my setup:

Note: The Cilium operator defaults to two replicas. On a single-node cluster, one cilium-operator pod will remain in a Pending state unless operator.replicas=1 is set.

For other options, see the Cilium Helm Reference.

helm repo add cilium https://helm.cilium.io/

helm repo update

export CILIUM_NAMESPACE=cilium

export VERSION=1.16.3

# FOR SINGLE NODE, SET operator.replicas=1

# NOTE: The `tunnel` option (deprecated in Cilium 1.14) has been removed. To enable native-routing mode, set `routingMode=native` (previously `tunnel=disabled`).

helm upgrade --install cilium cilium/cilium \

--version $VERSION \

--create-namespace \

--namespace $CILIUM_NAMESPACE \

--set operator.replicas=1 \

--set ipam.operator.clusterPoolIPv4PodCIDRList=10.42.0.0/16 \

--set ipv4NativeRoutingCIDR=10.42.0.0/16 \

--set ipv4.enabled=true \

--set loadBalancer.mode=dsr \

--set routingMode=native \

--set autoDirectNodeRoutes=true \

--set hubble.relay.enabled=true \

--set hubble.ui.enabled=true \

--set l2announcements.enabled=true \

--set kubeProxyReplacement=true

- Wait for Cilium to finish installing

# Watch and wait

export CILIUM_NAMESPACE=cilium

watch kubectl get pods -n $CILIUM_NAMESPACE

NAME READY STATUS RESTARTS AGE

cilium-operator-7b48f9fd58-pcdt9 1/1 Running 0 97m

cilium-hldps 1/1 Running 0 97m

hubble-ui-647f4487ff-w6qwn 2/2 Running 0 97m

cilium-envoy-7x7cq 1/1 Running 0 97m

hubble-relay-685f5f58fb-w4xsx 1/1 Running 0 97m

- Install the Cilium CLI on the K3s node

export CILIUM_CLI_VERSION=$(curl -s https://raw.githubusercontent.com/cilium/cilium-cli/main/stable.txt)

export CLI_ARCH=amd64

if [ "$(uname -m)" = "aarch64" ]; then CLI_ARCH=arm64; fi

curl -L --fail --remote-name-all https://github.com/cilium/cilium-cli/releases/download/${CILIUM_CLI_VERSION}/cilium-linux-${CLI_ARCH}.tar.gz{,.sha256sum}

sha256sum --check cilium-linux-${CLI_ARCH}.tar.gz.sha256sum

sudo tar xzvfC cilium-linux-${CLI_ARCH}.tar.gz /usr/local/bin

rm cilium-linux-${CLI_ARCH}.tar.gz{,.sha256sum}

- Validate the Helm installation using the Cilium CLI

export CILIUM_NAMESPACE=cilium

cilium status --wait -n $CILIUM_NAMESPACE

/¯¯\

/¯¯\__/¯¯\ Cilium: OK

\__/¯¯\__/ Operator: OK

/¯¯\__/¯¯\ Envoy DaemonSet: OK

\__/¯¯\__/ Hubble Relay: OK

\__/ ClusterMesh: disabled

Deployment hubble-relay Desired: 1, Ready: 1/1, Available: 1/1

Deployment cilium-operator Desired: 1, Ready: 1/1, Available: 1/1

DaemonSet cilium-envoy Desired: 1, Ready: 1/1, Available: 1/1

DaemonSet cilium Desired: 1, Ready: 1/1, Available: 1/1

Deployment hubble-ui Desired: 1, Ready: 1/1, Available: 1/1

Containers: cilium Running: 1

cilium-operator Running: 1

cilium-envoy Running: 1

hubble-ui Running: 1

hubble-relay Running: 1

Cluster Pods: 43/43 managed by Cilium

Helm chart version: 1.16.3

Image versions cilium quay.io/cilium/cilium:v1.16.3@sha256:62d2a09bbef840a46099ac4c69421c90f84f28d018d479749049011329aa7f28: 1

cilium-operator quay.io/cilium/operator-generic:v1.16.3@sha256:6e2925ef47a1c76e183c48f95d4ce0d34a1e5e848252f910476c3e11ce1ec94b: 1

cilium-envoy quay.io/cilium/cilium-envoy:v1.29.9-1728346947-0d05e48bfbb8c4737ec40d5781d970a550ed2bbd@sha256:42614a44e508f70d03a04470df5f61e3cffd22462471a0be0544cf116f2c50ba: 1

hubble-ui quay.io/cilium/hubble-ui:v0.13.1@sha256:e2e9313eb7caf64b0061d9da0efbdad59c6c461f6ca1752768942bfeda0796c6: 1

hubble-ui quay.io/cilium/hubble-ui-backend:v0.13.1@sha256:0e0eed917653441fded4e7cdb096b7be6a3bddded5a2dd10812a27b1fc6ed95b: 1

hubble-relay quay.io/cilium/hubble-relay:v1.16.3@sha256:feb60efd767e0e7863a94689f4a8db56a0acc7c1d2b307dee66422e3dc25a089: 1

- Run a cilium connectivity test

export CILIUM_NAMESPACE=cilium

cilium connectivity test -n $CILIUM_NAMESPACE

# Example output

ℹ️ Monitor aggregation detected, will skip some flow validation steps

ℹ️ Skipping tests that require a node Without Cilium

⌛ [default] Waiting for deployment cilium-test/client to become ready...

⌛ [default] Waiting for deployment cilium-test/client2 to become ready...

⌛ [default] Waiting for deployment cilium-test/echo-same-node to become ready...

⌛ [default] Waiting for deployment cilium-test/echo-other-node to become ready...

⌛ [default] Waiting for CiliumEndpoint for pod cilium-test/client-6b4b857d98-lc2tv to appear...

⌛ [default] Waiting for CiliumEndpoint for pod cilium-test/client2-646b88fb9b-n2fz9 to appear...

⌛ [default] Waiting for pod cilium-test/client2-646b88fb9b-n2fz9 to reach DNS server on cilium-test/echo-same-node-557b988b47-gm2p4 pod...

⌛ [default] Waiting for pod cilium-test/client-6b4b857d98-lc2tv to reach DNS server on cilium-test/echo-same-node-557b988b47-gm2p4 pod...

⌛ [default] Waiting for pod cilium-test/client-6b4b857d98-lc2tv to reach DNS server on cilium-test/echo-other-node-78455455d5-swvcj pod...

⌛ [default] Waiting for pod cilium-test/client2-646b88fb9b-n2fz9 to reach DNS server on cilium-test/echo-other-node-78455455d5-swvcj pod...

⌛ [default] Waiting for pod cilium-test/client-6b4b857d98-lc2tv to reach default/kubernetes service...

⌛ [default] Waiting for pod cilium-test/client2-646b88fb9b-n2fz9 to reach default/kubernetes service...

⌛ [default] Waiting for CiliumEndpoint for pod cilium-test/echo-other-node-78455455d5-swvcj to appear...

⌛ [default] Waiting for CiliumEndpoint for pod cilium-test/echo-same-node-557b988b47-gm2p4 to appear...

⌛ [default] Waiting for Service cilium-test/echo-other-node to become ready...

⌛ [default] Waiting for Service cilium-test/echo-other-node to be synchronized by Cilium pod cilium/cilium-j4hn2

⌛ [default] Waiting for Service cilium-test/echo-same-node to become ready...

⌛ [default] Waiting for Service cilium-test/echo-same-node to be synchronized by Cilium pod cilium/cilium-j4hn2

⌛ [default] Waiting for NodePort 172.16.222.193:30418 (cilium-test/echo-same-node) to become ready...

⌛ [default] Waiting for NodePort 172.16.222.193:30160 (cilium-test/echo-other-node) to become ready...

⌛ [default] Waiting for NodePort 172.16.222.191:30418 (cilium-test/echo-same-node) to become ready...

⌛ [default] Waiting for NodePort 172.16.222.191:30160 (cilium-test/echo-other-node) to become ready...

⌛ [default] Waiting for NodePort 172.16.222.194:30418 (cilium-test/echo-same-node) to become ready...

⌛ [default] Waiting for NodePort 172.16.222.194:30160 (cilium-test/echo-other-node) to become ready...

⌛ [default] Waiting for NodePort 172.16.222.2:30418 (cilium-test/echo-same-node) to become ready...

⌛ [default] Waiting for NodePort 172.16.222.2:30160 (cilium-test/echo-other-node) to become ready...

⌛ [default] Waiting for NodePort 172.16.222.192:30418 (cilium-test/echo-same-node) to become ready...

⌛ [default] Waiting for NodePort 172.16.222.192:30160 (cilium-test/echo-other-node) to become ready...

ℹ️ Skipping IPCache check

🔭 Enabling Hubble telescope...

⚠️ Unable to contact Hubble Relay, disabling Hubble telescope and flow validation: rpc error: code = Unavailable desc = connection error: desc = "transport: Error while dialing: dial tcp 127.0.0.1:4245: connect: connection refused"

ℹ️ Expose Relay locally with:

cilium hubble enable

cilium hubble port-forward&

ℹ️ Cilium version: 1.15.1

🏃 Running tests...

[=] Test [no-policies]

..................................

[=] Test [no-policies-extra]

....................

[=] Test [allow-all-except-world]

....................

[=] Test [client-ingress]

..

[=] Test [client-ingress-knp]

..

[=] Test [allow-all-with-metrics-check]

....

[=] Test [all-ingress-deny]

........

[=] Test [all-ingress-deny-knp]

............

[=] Test [all-entities-deny]

........

[=] Test [cluster-entity]

..

[=] Test [host-entity]

..........

[=] Test [echo-ingress]

....

[=] Test [echo-ingress-knp]

....

[=] Test [client-ingress-icmp]

..

[=] Test [client-egress]

....

[=] Test [client-egress-knp]

....

[=] Test [client-egress-expression]

....

[=] Test [client-egress-expression-knp]

....

[=] Test [client-with-service-account-egress-to-echo]

....

[=] Test [client-egress-to-echo-service-account]

....

[=] Test [to-entities-world]

......

[=] Test [to-cidr-external]

....

[=] Test [to-cidr-external-knp]

....

[=] Test [echo-ingress-from-other-client-deny]

......

[=] Test [client-ingress-from-other-client-icmp-deny]

......

[=] Test [client-egress-to-echo-deny]

......

[=] Test [client-ingress-to-echo-named-port-deny]

....

[=] Test [client-egress-to-echo-expression-deny]

....

[=] Test [client-with-service-account-egress-to-echo-deny]

....

[=] Test [client-egress-to-echo-service-account-deny]

..

[=] Test [client-egress-to-cidr-deny]

....

[=] Test [client-egress-to-cidr-deny-default]

....

[=] Test [health]

....

[=] Skipping Test [north-south-loadbalancing] (Feature node-without-cilium is disabled)

...

[=] Test [pod-to-pod-encryption]

...

[=] Test [node-to-node-encryption]

...

[=] Skipping Test [egress-gateway-excluded-cidrs] (Feature enable-ipv4-egress-gateway is disabled)

[=] Skipping Test [pod-to-node-cidrpolicy] (Feature cidr-match-nodes is disabled)

[=] Skipping Test [north-south-loadbalancing-with-l7-policy] (Feature node-without-cilium is disabled)

[=] Test [echo-ingress-l7]

............

[=] Test [echo-ingress-l7-named-port]

............

[=] Test [client-egress-l7-method]

............

[=] Test [client-egress-l7]

..........

[=] Test [client-egress-l7-named-port]

..........

[=] Skipping Test [client-egress-l7-tls-deny-without-headers] (Feature secret-backend-k8s is disabled)

[=] Skipping Test [client-egress-l7-tls-headers] (Feature secret-backend-k8s is disabled)

[=] Skipping Test [client-egress-l7-set-header] (Feature secret-backend-k8s is disabled)

[=] Skipping Test [echo-ingress-auth-always-fail] (Feature mutual-auth-spiffe is disabled)

[=] Skipping Test [echo-ingress-mutual-auth-spiffe] (Feature mutual-auth-spiffe is disabled)

[=] Skipping Test [pod-to-ingress-service] (Feature ingress-controller is disabled)

[=] Skipping Test [pod-to-ingress-service-deny-all] (Feature ingress-controller is disabled)

[=] Skipping Test [pod-to-ingress-service-allow-ingress-identity] (Feature ingress-controller is disabled)

[=] Test [dns-only]

..........

[=] Test [to-fqdns]

........

✅ All 44 tests (321 actions) successful, 12 tests skipped, 0 scenarios skipped.

Done. It is installed!
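The Helm flags used earlier in this section can also be kept in a values file, which is easier to review and keep in version control than a long chain of --set flags. This is a minimal sketch, assuming the file name cilium-values.yaml and the wrapper function `install_cilium` (both are my own placeholders). The keys mirror the --set flags used above.

```shell
# Write the settings from the earlier helm command into a values file.
# The file name cilium-values.yaml is an arbitrary choice for this sketch.
cat > cilium-values.yaml <<'EOF'
operator:
  replicas: 1                 # single node: run one operator replica
ipam:
  operator:
    clusterPoolIPv4PodCIDRList:
      - 10.42.0.0/16
ipv4NativeRoutingCIDR: 10.42.0.0/16
ipv4:
  enabled: true
loadBalancer:
  mode: dsr
routingMode: native
autoDirectNodeRoutes: true
hubble:
  relay:
    enabled: true
  ui:
    enabled: true
l2announcements:
  enabled: true
kubeProxyReplacement: true
EOF

# install_cilium: hypothetical wrapper around the same helm release as above.
# It is only defined here; call it explicitly once the chart repo is added.
install_cilium() {
  helm upgrade --install cilium cilium/cilium \
    --version 1.16.3 \
    --create-namespace \
    --namespace cilium \
    --values cilium-values.yaml
}
```

Calling `install_cilium` against the running cluster should be equivalent to the flag-based command, since both describe the same release in the same namespace.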

Install Cilium LB IPAM (Load Balancer)

Version 1.13 of Cilium introduces the LoadBalancer IP Address Management (LB IPAM) feature, which eliminates the need for MetalLB. This simplifies operations as it reduces the number of components that need to be managed from two (CNI and MetalLB) to just one. The LB IPAM feature works seamlessly with Cilium BGP Control Plane functionality, which is already integrated.

The LB IPAM feature does not need to be deployed explicitly. Upon adding a custom resource of kind CiliumLoadBalancerIPPool to the cluster, the IPAM controller automatically activates. You can find the YAML manifest that was applied to activate this feature below.

For further examples, check out the [LoadBalancer IP Address Management (LB IPAM) documentation](https://docs.cilium.io/en/stable/network/lb-ipam/#loadbalancer-ip-address-management-lb-ipam).

- Create a CiliumLoadBalancerIPPool manifest file, e.g., CiliumLoadBalancerIPPool.yaml, also shown below, where 172.16.111.0/24 is part of my 172.16.0.0/16 LAN

apiVersion: "cilium.io/v2alpha1"
kind: CiliumLoadBalancerIPPool
metadata:
  name: "lb-pool"
spec:
  blocks:
    - cidr: "172.16.111.0/24"

- Create the CiliumLoadBalancerIPPool by applying the manifest

kubectl create -f CiliumLoadBalancerIPPool.yaml

ciliumloadbalancerippool.cilium.io/lb-pool created

- After adding the pool to the cluster, it appears like this:

kubectl get ippools

NAME DISABLED CONFLICTING IPS AVAILABLE AGE

lb-pool false False 254 1s

- We can test it by creating a test LoadBalancer service. Create the manifest file, e.g., test-lb.yaml, as shown below. Note: this only tests that a LoadBalancer IP is assigned; it creates the service without routing to any application

apiVersion: v1
kind: Service
metadata:
  name: test
spec:
  type: LoadBalancer
  ports:
    - port: 1234

- Create the LoadBalancer service by applying the manifest

kubectl create -f test-lb.yaml

service/test created

- Let’s see if it has an EXTERNAL-IP. In my example below, there is an external IP of 172.16.111.72! There is no application behind this service, so there is nothing more to test other than seeing the EXTERNAL-IP provisioned.

kubectl get svc test

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

test LoadBalancer 10.43.28.135 172.16.111.72 1234:32514/TCP 1s
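When scripting this check, the EXTERNAL-IP column can be pulled out of the same kubectl output. This is a minimal sketch, assuming the test service above lives in the current namespace; the helper name `lb_ip_from_row` is my own.

```shell
# lb_ip_from_row: reads one `kubectl get svc --no-headers` row on stdin and
# prints the EXTERNAL-IP column, or nothing while it is <pending> or <none>.
lb_ip_from_row() {
  awk '$4 != "<pending>" && $4 != "<none>" {print $4}'
}

# Only query the cluster when kubectl is available on this machine.
if command -v kubectl >/dev/null 2>&1; then
  ip=$(kubectl get svc test --no-headers 2>/dev/null | lb_ip_from_row)
  echo "test service external IP: ${ip:-not assigned}"
  # The service has no backend, so it can be removed once the IP is confirmed:
  # kubectl delete svc test
fi
```

An empty result usually means that no pool matched the service or that the pool has no free addresses.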

Install Cilium L2 Announcements (No need for MetalLB!)

In the last step, CiliumLoadBalancerIPPool provisions External IP addresses. However, these addresses are not yet routable on the LAN as they are not announced. We have two options to make them accessible:

- We could use the Cilium BGP control plane feature. A good blog post about it is available here

- Alternatively, without any BGP configuration needed on the upstream router, we can use L2 Announcements, which are available from Cilium version 1.14. This feature can be enabled by setting the l2announcements.enabled=true flag.

L2 Announcements allow services to be seen and accessed over the local area network. This functionality is mainly aimed at on-premises setups in environments that do not use BGP-based routing, like office or campus networks. Users who had depended on MetalLB for similar capabilities discovered they could entirely eliminate MetalLB from their configurations and streamline them with the Cilium CNI.

l2announcements.enabled=true was already set in my initial Helm install. Note: to enable L2 Announcements after deploying Cilium, we can do a helm upgrade:

export CILIUM_NAMESPACE=cilium

helm upgrade cilium cilium/cilium \

--namespace $CILIUM_NAMESPACE \

--reuse-values \

--set l2announcements.enabled=true

Next, we need a CiliumL2AnnouncementPolicy to start L2 announcements and specify where that takes place.

- Before we create a CiliumL2AnnouncementPolicy, let’s get the network interface name of the Kubernetes nodes. On each node, collect this information:

ip addr

# On my VM I see ens18 with altname enp0s18:

2: ens18: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000

link/ether bc:24:11:64:27:ba brd ff:ff:ff:ff:ff:ff

altname enp0s18

- Also, get an identifying label of the worker nodes. In a single-node cluster, the worker node is also the master/control plane node

In a multi-node cluster, K3s worker nodes do not carry the role label node-role.kubernetes.io/control-plane, so matching on the absence of that label would restrict L2 announcements to worker nodes only. In my single-node cluster that would exclude the only node, because it is also the control plane, so I match on the label being present instead.

# Where k3s is the single node in the cluster

kubectl describe node k3s

# Example output

Name: k3s

Roles: control-plane,master

Labels: beta.kubernetes.io/arch=amd64

beta.kubernetes.io/instance-type=k3s

beta.kubernetes.io/os=linux

kubernetes.io/arch=amd64

kubernetes.io/hostname=k3s

kubernetes.io/os=linux

node-role.kubernetes.io/control-plane=true #<--use this!

node-role.kubernetes.io/master=true

node.kubernetes.io/instance-type=k3s

# etc...

And so we will use the following matchExpressions in our CiliumL2AnnouncementPolicy:

nodeSelector:
  matchExpressions:
    - key: node-role.kubernetes.io/control-plane
      operator: Exists

Let’s break down the snippet:

- matchExpressions: a section of a Kubernetes resource configuration that defines a set of conditions that must be satisfied for a rule to apply. In this case, it specifies the conditions for matching nodes.

- key: node-role.kubernetes.io/control-plane: one of the conditions defined in the match expression. It specifies the label key that Kubernetes checks on nodes.

- operator: Exists: the operator used to evaluate the condition. In this case, it checks whether a label with the key node-role.kubernetes.io/control-plane exists on a node, which it does on a control plane node, i.e. the single node in our cluster.
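The same label conditions can be previewed with kubectl before writing the policy, which shows which nodes a given operator would select. This is a sketch using kubectl's label-selector syntax; the variable names are my own.

```shell
# Label key used by the nodeSelector in this guide.
LABEL_KEY="node-role.kubernetes.io/control-plane"

# kubectl label-selector syntax: a bare key means "label exists",
# a leading "!" means "label does not exist".
EXISTS_SELECTOR="$LABEL_KEY"
ABSENT_SELECTOR='!'"$LABEL_KEY"

# Only query the cluster when kubectl is available on this machine.
if command -v kubectl >/dev/null 2>&1; then
  echo "Nodes matched by operator: Exists"
  kubectl get nodes -l "$EXISTS_SELECTOR" || true
  echo "Nodes matched by operator: DoesNotExist"
  kubectl get nodes -l "$ABSENT_SELECTOR" || true
fi
```

On the single-node cluster from this guide, the first query should list the k3s node and the second should list nothing.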

- Create the L2 Announcement policy manifest, e.g., CiliumL2AnnouncementPolicy.yaml, also shown below:

apiVersion: cilium.io/v2alpha1
kind: CiliumL2AnnouncementPolicy
metadata:
  name: loadbalancerdefaults
spec:
  nodeSelector:
    matchExpressions:
      - key: node-role.kubernetes.io/control-plane
        operator: Exists
  interfaces:
    - ens18
    - enp0s18
  externalIPs: true
  loadBalancerIPs: true

- Create the CiliumL2AnnouncementPolicy by applying the manifest

kubectl apply -f CiliumL2AnnouncementPolicy.yaml

ciliuml2announcementpolicy.cilium.io/loadbalancerdefaults created

- Let’s confirm the CiliumL2AnnouncementPolicy was created; we can run kubectl describe on it

kubectl get CiliumL2AnnouncementPolicy

NAME AGE

loadbalancerdefaults 1s

kubectl describe CiliumL2AnnouncementPolicy loadbalancerdefaults

Name: loadbalancerdefaults

Namespace:

Labels: <none>

Annotations: <none>

API Version: cilium.io/v2alpha1

Kind: CiliumL2AnnouncementPolicy

Metadata:

Creation Timestamp: 2023-12-19T22:40:54Z

Generation: 1

Resource Version: 11013

UID: 47cc185f-5f9f-4536-99fc-3106703c603e

Spec:

External I Ps: true

Interfaces:

ens18

enp0s18

Load Balancer I Ps: true

Node Selector:

Match Expressions:

Key: node-role.kubernetes.io/control-plane

Operator: Exists

Events: <none>

- The leases are created in the same namespace where Cilium is deployed, in my case, cilium. You can inspect the leases with the following command:

kubectl get leases -n cilium

# Example output

NAME HOLDER AGE

cilium-operator-resource-lock k3s-PPgDRsPrLH 3d3h

cilium-l2announce-kube-system-kube-dns k3s 2d22h

cilium-l2announce-kube-system-metrics-server k3s 2d22h

cilium-l2announce-cilium-test-echo-same-node k3s 2m43s
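The lease names in the output above follow the pattern cilium-l2announce-<namespace>-<service>, so the lease for one particular service can be looked up directly. This is a minimal sketch; the helper name `l2_lease_name` is my own, and the namespace `cilium` matches this guide's install.

```shell
# l2_lease_name NAMESPACE SERVICE: prints the lease name following the
# cilium-l2announce-<namespace>-<service> pattern seen in the listing above.
l2_lease_name() {
  printf 'cilium-l2announce-%s-%s\n' "$1" "$2"
}

lease=$(l2_lease_name kube-system kube-dns)
echo "$lease"

# Show which node currently holds the lease, if kubectl is available.
if command -v kubectl >/dev/null 2>&1; then
  kubectl get lease -n cilium "$lease" \
    -o jsonpath='{.spec.holderIdentity}{"\n"}' || true
fi
```

The holder identity is the node that answers ARP requests for that service's IP, which on a single-node cluster is always the same node.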

Troubleshooting

If you do not get a lease (which happened to me the first time), be sure to check that:

- Your CiliumLoadBalancerIPPool (e.g., 172.16.111.0/24) is part of your LAN network (e.g., 172.16.0.0/16)

- The matchExpressions in your CiliumL2AnnouncementPolicy are accurate, so that the policy selects a node

In a frantic state of applying all sorts of changes, you may end up with an error. If so, you might need to delete the CiliumLoadBalancerIPPool and CiliumL2AnnouncementPolicy, then reboot your Kubernetes nodes before reapplying the manifests. Note: Some troubleshooting notes I had referenced: https://github.com/cilium/cilium/issues/26996
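The first check, whether the pool CIDR actually sits inside the LAN range, is easy to get wrong by eye. The sketch below does the comparison with plain shell arithmetic. The function names `ip_to_int` and `cidr_within` are my own, and it handles IPv4 only.

```shell
# ip_to_int A.B.C.D: prints the IPv4 address as a 32-bit integer.
ip_to_int() {
  local a b c d
  IFS=. read -r a b c d <<EOF
$1
EOF
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# cidr_within INNER OUTER: succeeds when every address of INNER lies in OUTER.
cidr_within() {
  local inner_ip=${1%/*} inner_len=${1#*/}
  local outer_ip=${2%/*} outer_len=${2#*/}
  # A shorter prefix covers more addresses, so INNER cannot be wider than OUTER.
  [ "$inner_len" -ge "$outer_len" ] || return 1
  local mask=$(( (0xFFFFFFFF << (32 - outer_len)) & 0xFFFFFFFF ))
  [ $(( $(ip_to_int "$inner_ip") & mask )) -eq $(( $(ip_to_int "$outer_ip") & mask )) ]
}

# Example with the addresses used in this guide.
if cidr_within 172.16.111.0/24 172.16.0.0/16; then
  echo "pool is inside the LAN range"
else
  echo "pool is outside the LAN range"
fi
```

With the addresses from this guide the check passes; swapping the second argument for an unrelated range such as 192.168.0.0/16 makes it fail.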

Configure kubectl and remote access to K3s cluster

To interact with the K3s Kubernetes cluster, you need to configure the kubectl command-line tool to communicate with the K3s API server. The K3s installation script installs the kubectl binary automatically for you.

The notes below are for when you want to set up kubectl yourself and access the cluster remotely.

- Run the following commands to configure kubectl

mkdir -p ~/.kube

sudo cp /etc/rancher/k3s/k3s.yaml ~/.kube/config && sudo chown $USER ~/.kube/config

sudo chmod 600 ~/.kube/config && export KUBECONFIG=~/.kube/config

- To make life easier you can add a short alias and enable auto-complete

source <(kubectl completion bash) # set up autocomplete in bash into the current shell, bash-completion package should be installed first.

echo "source <(kubectl completion bash)" >> ~/.bashrc # add autocomplete permanently to your bash shell.

# Shortcut alias

echo 'alias k=kubectl' >>~/.bashrc

# Enable autocomplete for the alias too

echo 'complete -F __start_kubectl k' >>~/.bashrc

# reload bash config

source ~/.bashrc

- To ensure everything is set up correctly, let’s verify our cluster’s status:

kubectl get nodes

kubectl cluster-info

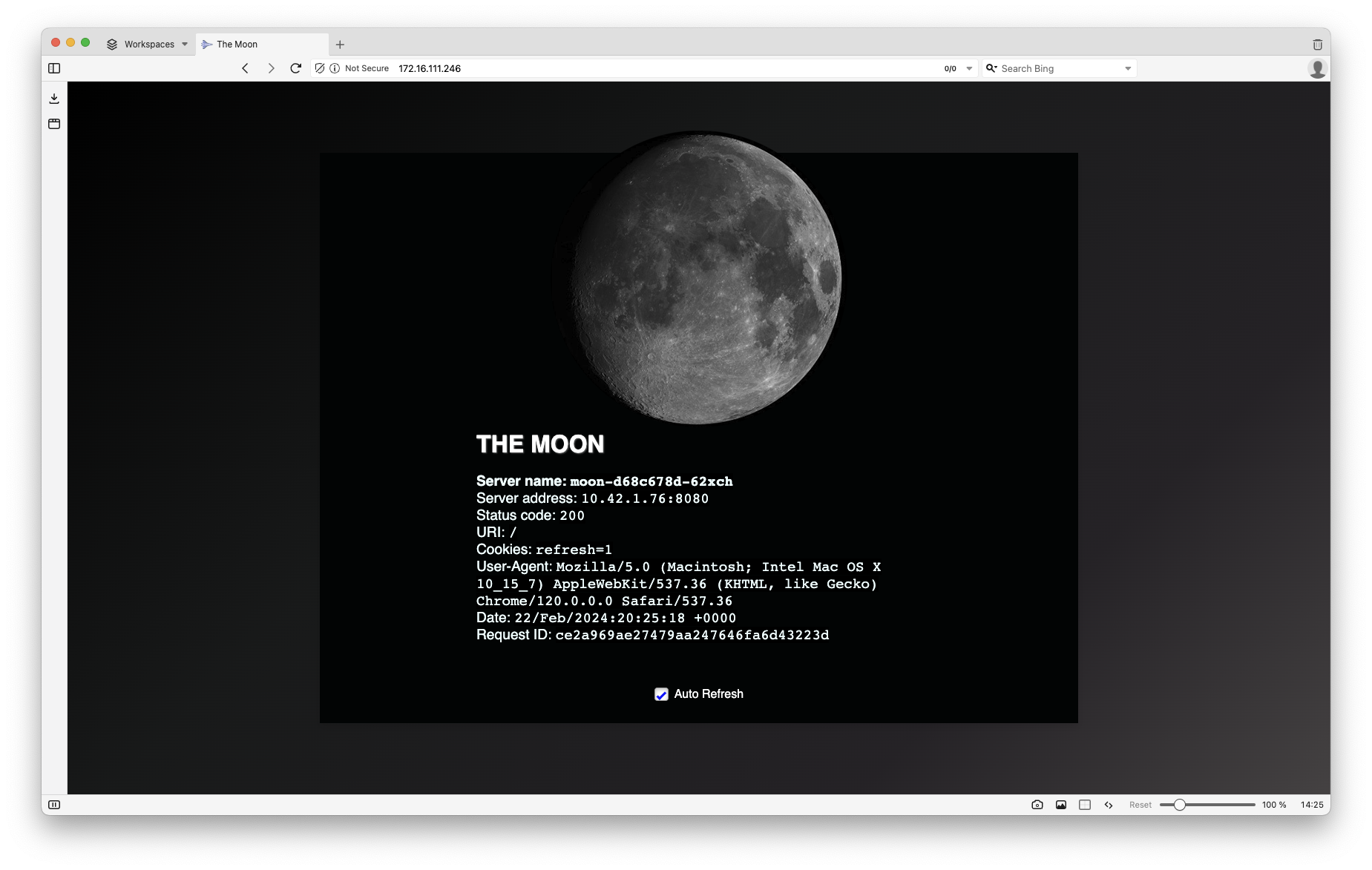
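For remote access, the kubeconfig that K3s generates points at the loopback address https://127.0.0.1:6443, so a copy placed on another machine needs its server field changed to an address where the node is reachable. This is a minimal sketch; the helper name `point_kubeconfig_at` and the address 172.16.222.2 are placeholders of mine, and the demonstration runs against a scratch file rather than a real kubeconfig.

```shell
# point_kubeconfig_at FILE HOST: rewrites the loopback API server address in
# FILE so it points at HOST instead. A backup is kept as FILE.bak.
point_kubeconfig_at() {
  sed -i.bak "s#https://127.0.0.1:6443#https://$2:6443#" "$1"
}

# Demonstration on a scratch file containing only the relevant line.
demo=$(mktemp)
printf '    server: https://127.0.0.1:6443\n' > "$demo"
point_kubeconfig_at "$demo" 172.16.222.2   # placeholder node address
cat "$demo"
```

On a workstation, the same function would be applied to a copy of /etc/rancher/k3s/k3s.yaml fetched from the node, with the node's real IP or hostname as the second argument.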

Time to test: Deploy an example application

We are ready to deploy our first application if you have got this far without issues!

I have provided a YAML manifest to deploy a sample application and expose it outside your Kubernetes cluster through a LoadBalancer service backed by Cilium LB IPAM and L2 announcements.

- Download the-moon-all-in-one.yaml and review the file if you want

curl -fsSL https://gist.githubusercontent.com/armsultan/f674c03d4ba820a919ed39c8ca926ea2/raw/c408f4ccd0b23907c5c9ed7d73b14ae3fd0d30e1/the-moon-all-in-one.yaml -o the-moon-all-in-one.yaml

- Apply the YAML manifest using kubectl

kubectl apply -f the-moon-all-in-one.yaml

- We can see everything being created using the watch command in combination with kubectl

watch kubectl get all -n solar-system -o wide

# Example output

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

pod/moon-7bcd85b4c4-6q9q9 1/1 Running 3 (17m ago) 3d1h 10.42.0.129 k3s <none> <none>

pod/moon-7bcd85b4c4-bcrw7 1/1 Running 3 (17m ago) 3d1h 10.42.0.136 k3s <none> <none>

pod/moon-7bcd85b4c4-p4vzq 1/1 Running 3 (17m ago) 3d1h 10.42.0.186 k3s <none> <none>

pod/moon-7bcd85b4c4-4v278 1/1 Running 3 (17m ago) 3d1h 10.42.0.27 k3s <none> <none>

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

service/moon-lb-svc LoadBalancer 10.43.76.189 172.16.111.68 80:31100/TCP 3d1h app=moon

NAME READY UP-TO-DATE AVAILABLE AGE CONTAINERS IMAGES SELECTOR

deployment.apps/moon 4/4 4 4 3d1h moon armsultan/solar-system:moon-nonroot app=moon

NAME DESIRED CURRENT READY AGE CONTAINERS IMAGES SELECTOR

replicaset.apps/moon-7bcd85b4c4 4 4 4 3d1h moon armsultan/solar-system:moon-nonroot app=moon,pod-template-hash=7bcd85b4c4

In the example output above, my Cilium-powered LoadBalancer and L2 announcement have exposed my “moon” application on the IP address 172.16.111.68

curl 172.16.111.68 -s | grep title\>

<title>The Moon</title>

…and that my friend, is that moon.
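The same check can be wrapped into a small reusable test, for example to re-run after a node reboot. This is a sketch under stated assumptions: the helper names `page_title` and `check_app` are my own, and the expected title comes from the curl output above.

```shell
# page_title: reads HTML on stdin and prints the text of the first <title> tag.
page_title() {
  sed -n 's:.*<title>\(.*\)</title>.*:\1:p' | head -n 1
}

# check_app URL EXPECTED: succeeds when the page served at URL has the
# expected title. It gives up after 5 seconds if the address is unreachable.
check_app() {
  [ "$(curl -s --max-time 5 "$1" | page_title)" = "$2" ]
}

# Offline demonstration of the title extraction.
echo '<html><head><title>The Moon</title></head></html>' | page_title
```

Against the running service, the call would look like `check_app http://172.16.111.68 "The Moon" && echo "moon is reachable"`, using whatever EXTERNAL-IP your service was assigned.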

- If you would like to delete this deployment, we can simply delete everything in the namespace “solar-system”

kubectl delete namespace solar-system

We have our K3s cluster up and running with Cilium!